University of Notre Dame

Industry: University Research

System: Ascension Technology MotionStar

When people converse, they use head motions, postures and gestures that convey information. Researchers have hypothesized that individuals coordinate their movements while they talk in a sort of “conversational dance.” Dr. Steven M. Boker of the University of Notre Dame’s Department of Psychology has been using motion tracking to study this conversational dance along with other aspects of human movement.

Dr. Boker’s lab has been pursuing three main experimental tracks: One is a study of interpersonal coordination of movement during two-person conversations. The second examines interlimb and interpersonal coordination of movements during a one- or two-person dance. The third is research into whether people learn motions by imitation of visually perceived motion.

These studies use a sixteen-sensor Ascension Technology MotionStar system to collect movement data. According to Dr. Boker, MotionStar was chosen because it poses no occlusion problems and has a very high sample rate that meets the lab’s requirements. Because the experiments required orientation data, the lab also needed a six-degrees-of-freedom tracker.

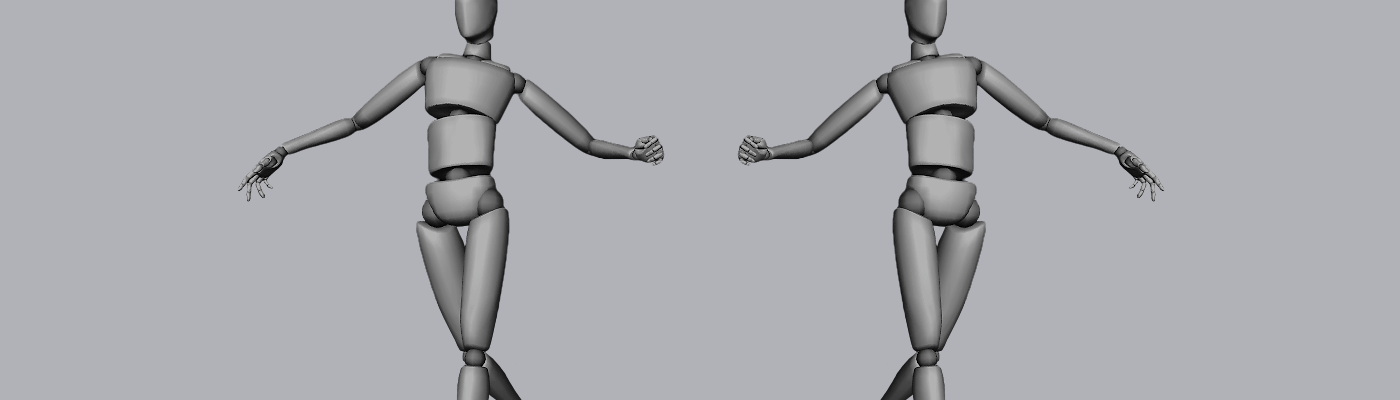

In the dance research project, Symmetry Building and Symmetry Breaking in Synchronized Movement, the lab sought basic answers to questions about mirror systems: behavioral patterns linking perceived movements to duplication of those movements. Pairs of male and female undergraduates outfitted with MotionStar sensors were given lead and follow instructions during brief dance segments in which the velocity of their movements was tracked. A strong correlation between individuals’ movements when they were both told to lead showed that entrainment [pulling or dragging along] of cyclic movement between individuals is especially easy. This may explain the near-universal use of cyclic head movements to nonverbally indicate agreement or disagreement.

The experiment concluded that the primary information a listener needs to communicate nonverbally during conversation, understanding or misunderstanding, would likely be communicated by a method that allows the quickest recognition of entrainment between individuals.

“The practical implications of these findings are many. Three applications that come to mind are diagnostics for communicative and affective disorders (such as autism), training for psychological counselors (in order to help them be more effective in both perceiving and producing nonverbal cues), and training for teachers using distance education (videoconferencing) technologies,” says Dr. Boker.

“We are also working with a group that is interested in modeling affective (emotional state) communication, to be applied to coordinate the movements of robots and computer-generated avatars to display more lifelike behavior during conversational interactions,” he adds.

A new experiment scheduled for later in 2003 will use MotionStar in a study of postural control and aging. The lab has developed a room with walls that move on two axes under computer control. “We will be studying how visual perception and proprioception (the sense you have of where your limbs are) are coupled together while performing a set of balance tasks,” Dr. Boker explains. “We hope to better understand how, as aging occurs and the senses are less acute, our postural control system reoptimizes itself. We hope that we can devise strategies and training that might delay the onset of increased probability of falling.”

Dr. Boker is a faculty member in the Quantitative Psychology Program at Notre Dame. To learn more about the Boker Lab’s use of motion tracking to examine what our movements mean to us, visit www.nd.edu/~sboker.